Chances are you've been underwhelmed by Apple Intelligence, but a combination of unrealistic expectations, fictional narratives, and ignored progress won't stop it from being a key part of Apple's ecosystem.

The world viewed WWDC 2024 with middling expectations for Apple's late entry into the artificial intelligence race. The slow rollout and limited feature set drew a predictable yawn from analysts accustomed to the grift and glamour promised by other tech companies, a reaction that should surprise no one by now.

The latest protracted exhale of doom-and-gloom reporting comes from The Information, with a wide-ranging history of, and speculation on, Apple's AI initiatives. Much of it has been previously covered by Bloomberg, but the report does detail the history of Apple's foundation model team.

My focus, however, is on the familiar narrative about Apple's supposed internal confusion over AI and its strategy (or lack thereof), which has allegedly led to discontent and staff departures. As always, it's important to remember that "people familiar with the matter" are likely disgruntled employees excited to speak about their pet peeves, and the coverage will likely lean into any negative narratives that help amplify a story.

The reality is that the artificial intelligence industry is large, complex, and full of momentum that results in more flux and noise than even Apple is used to dealing with. It would be impossible to ignore the allure of working on what is promised to be the future of AI, superintelligence, with a pay package larger than that of Apple's CEO.

Apple's true AI problem

The issue isn't that Apple got caught off guard or is suffering some kind of talent drain. It's the same problem the world usually has with the company: its pursuit of consumer privacy and its insistence on keeping product plans secret.

Both run directly counter to the AI mantra, which demands being loud, declaring the end of civilization with every point update, and sucking up every ounce of available data, legal or not. That said, Apple's offerings are very different from what we've seen from other AI companies and certainly come off as underwhelming compared to seemingly sentient AI chatbots.

There's no good way to make advanced autocorrect sound interesting. Apple Intelligence launched with Writing Tools that edit, manipulate, or generate text; Image Playground, with its poorly made renderings of people and emoji; and notification summaries that caught a lot of flak from news publications.

On a spectrum running from Apple's worst launch, Apple Maps, to the iPhone, Apple Intelligence sits closer to Apple Maps. However, it's not the absolute disaster many reports make it out to be.

For the most part, people outside the tech space likely have some idea of what artificial intelligence is but no idea that Apple even has a version running on their iPhones. Apple's strategy in the space is to get out of the way and offer the technology in ways that enhance the user experience without always shouting that it is an AI feature.

The most visible signs that Apple Intelligence is in use are the shiny rainbow elements that show up in the UI. Much of Apple Intelligence isn't explained beyond a tiny symbol in a summary, if any sign of AI is shown at all.

Going forward, Apple's strategy of offering developers and users direct access to its foundation models lets it get even further out of the way. There will be times when Apple Intelligence is in use without the user ever realizing it, and that's the point.

That subtlety is the perceived problem with Apple Intelligence. It isn't here to supposedly replace jobs or discover "new" physics; it's here to improve workflows and help users with mundane tasks.

AI reporting's red herrings

One of the most frequently repeated misconceptions about Apple Intelligence since its launch is the idea that Apple was behind or caught off guard by AI. Rewind to before ChatGPT's debut, and Apple was at the forefront of machine learning, even putting Neural Engines in its products before they became an industry standard.

Once "artificial intelligence" became a catch-all buzzword, used everywhere even where "machine learning" was the more accurate term, demand for it skyrocketed. Rather than mislead customers, Apple kept referring to its machine learning tools as such and waited to call anything AI until it was using generative technologies.

The report suggests that John Giannandrea, Apple's head of machine learning and AI strategy, was so unconvinced that LLMs could be useful that he stopped Apple from pursuing them in 2022 when Ruoming Pang, the now-departed head of Apple's foundation model team, proposed them. That narrative is likely accurate, but it leaves out the fact that LLMs were largely useless in their early form as wildly hallucination-prone chatbots.

The story around Apple Intelligence continues to suggest that Apple's executive team woke up sometime in 2023, realized the technology was important, and decided to pursue it. However, this very same report says that Apple's teams under Giannandrea had been working on various AI technologies since those teams were assembled in 2018.

The reality is much simpler: analysts and pundits are unhappy with how Apple has approached AI so far. They seem to have completely missed what Apple's strategy is and continue to push the idea that Apple is behind instead of considering the company's own direction.

Of course, I won't ignore that there has been some reshuffling of leadership internally as Apple has adjusted how it wants to tackle AI. Craig Federighi is now overseeing Apple's AI initiatives, and the company is looking externally to bolster its efforts.

However, instead of celebrating Apple's ability to develop its own internal models while also giving customers options, reports suggest Apple is giving up on its own efforts. There don't appear to be any signs of that actually happening beyond these reports from anonymous employees.

Apple's true AI goals

For some reason, everyone seems completely ready to accept that Apple is just going to hire OpenAI or Anthropic to build the backend of Siri while alienating its own teams. This dramatic departure from Apple's usual strategy of vertical integration has been accepted as inevitable because, according to the narrative of how far behind Apple is, the company has no choice but to give up.

If you instead view Apple as a company that knows what it is doing and is actively evolving its strategy for the consistently exaggerated AI field, it's easy to see where Apple may be going with Apple Intelligence. It starts with app intents and ends with a private AI ecosystem.

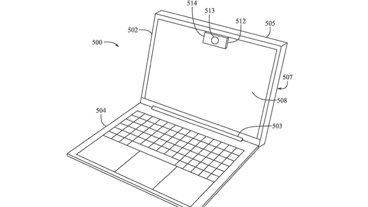

Apple's executive team likely stepped in and surprised the Apple Intelligence team with its announcement of the contextual AI and Siri delay. The report reveals what I've suspected all along: Apple had a working version ready to ship, but its failure rate was too high to meet Apple's exacting standards.

The primary example Apple showed off for the new contextual Siri, which relies on app intents, is the mom-at-the-airport scenario. Imagine if there were a 30% chance that it told you the wrong time, or even the wrong airport or the wrong day.

If poorly summarized headlines made the news, imagine the fallout if even one person out of billions of users went to the wrong airport.

Apple's pursuit of perfection with AI is likely folly, since the technology is inherently failure-prone. It can't think or reason, so unless there are robust checks and balances involving human intervention, there will likely never be a 100% guarantee of accuracy.

Whatever system Apple devises to help overcome this limitation will likely debut in late 2025 or early 2026, during the iOS 26 cycle. I have no doubt that a version of that technology is coming, even as Apple puts app intents to use in other ways across its platforms.

While a contextual, on-device, and private Apple Intelligence would be game-changing on its own, it's nothing compared to what could come next. Apple's willingness to develop its own models while still giving users access to others will be its ultimate victory in the space.

I don't believe Apple is going to replace the Siri backend with Claude or ChatGPT. That reporting seems to be the result of pundit wishcasting and the desire to see Apple fail.

Instead, Apple seems to be in talks with OpenAI, Anthropic, and others to bring versions of their popular AI models to Private Cloud Compute. If that happens, it would go well beyond Siri simply shaking hands with ChatGPT over a privacy-preserving connection; it would guarantee that user data is never used by third parties.

If Apple's pitch for Private Cloud Compute is a server-side AI that runs with the same privacy and security measures as an on-device AI, then imagine those same conditions applying to ChatGPT.

The future of Apple Intelligence is a combination of contextually aware local models running on devices with access to third-party models running via Private Cloud Compute. Users would be able to choose which models suit their needs in the moment and submit data without fear of it becoming part of training data.

The Apple Intelligence ecosystem would be the only one built from the ground up for ethical artificial intelligence. Regardless of the model, whether on device or on Apple's servers, it would run privately, securely, and on renewable energy.

In a world filled with exaggerations around AI and its future, we should celebrate the grounded and realistic promises. Artificial intelligence is a tool, like a hammer, that can enhance human productivity, not replace it, and Apple's strategy reflects that.

Apple's tagline for Apple Intelligence is "AI for the rest of us." And by providing billions of Apple customers access to sustainable, ethical, private, and secure AI models, I'd argue Apple is pursuing a potential future that's not only realistic, but places it well ahead of the competition.