Live translation in iOS 26 will turn the iPhone into a real-time interpreter for calls, messages and video chats, all without leaving your app or sending data to the cloud. Here's how it works.

Live translation shows up where you already talk: in Messages, FaceTime, and the Phone app. It provides real-time, on-device interpretation of conversations without sending data to the cloud.

The feature is part of Apple Intelligence, a new system-wide framework for generative AI that works directly on supported devices. Live translation processes spoken and written conversations entirely on the iPhone.

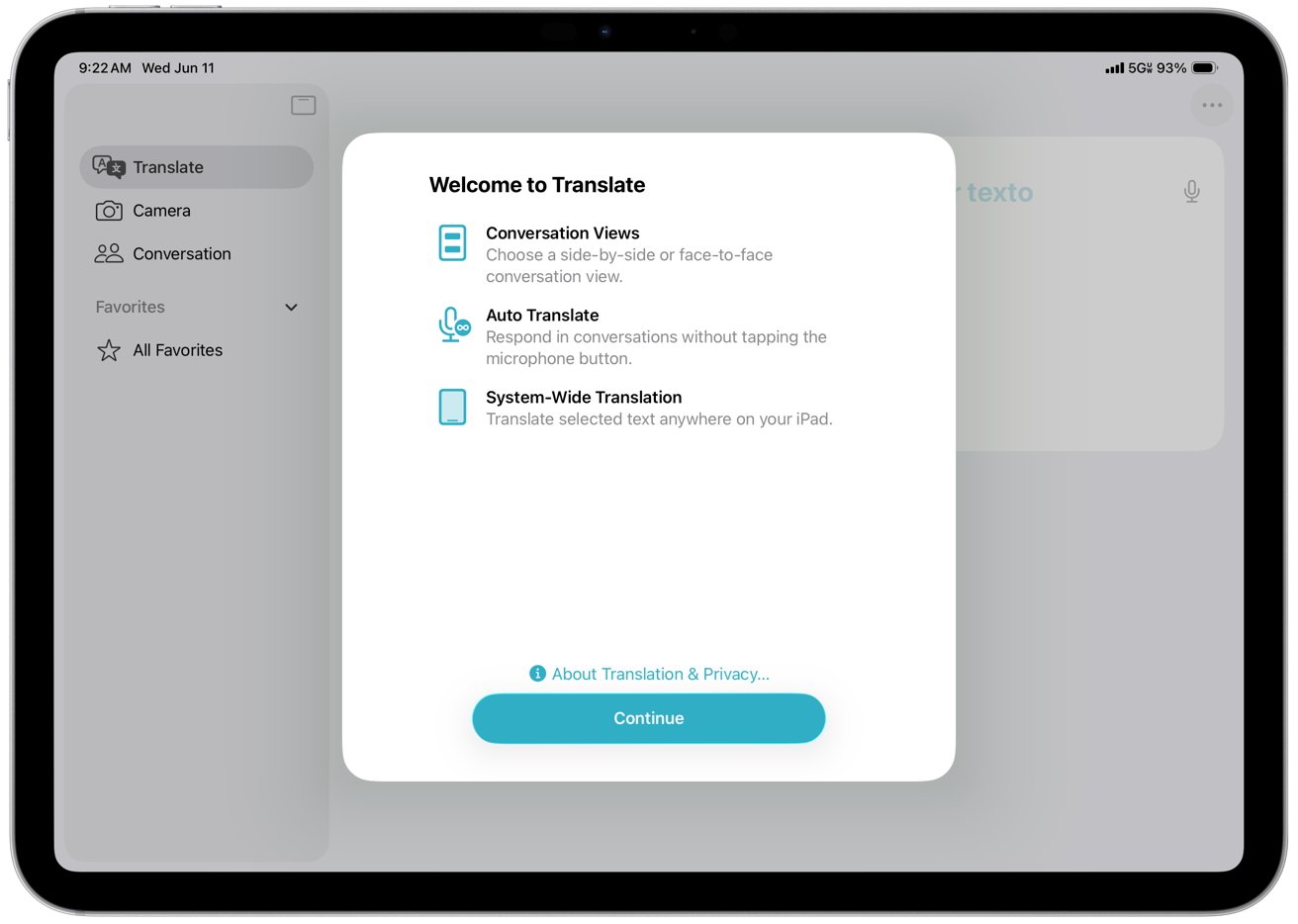

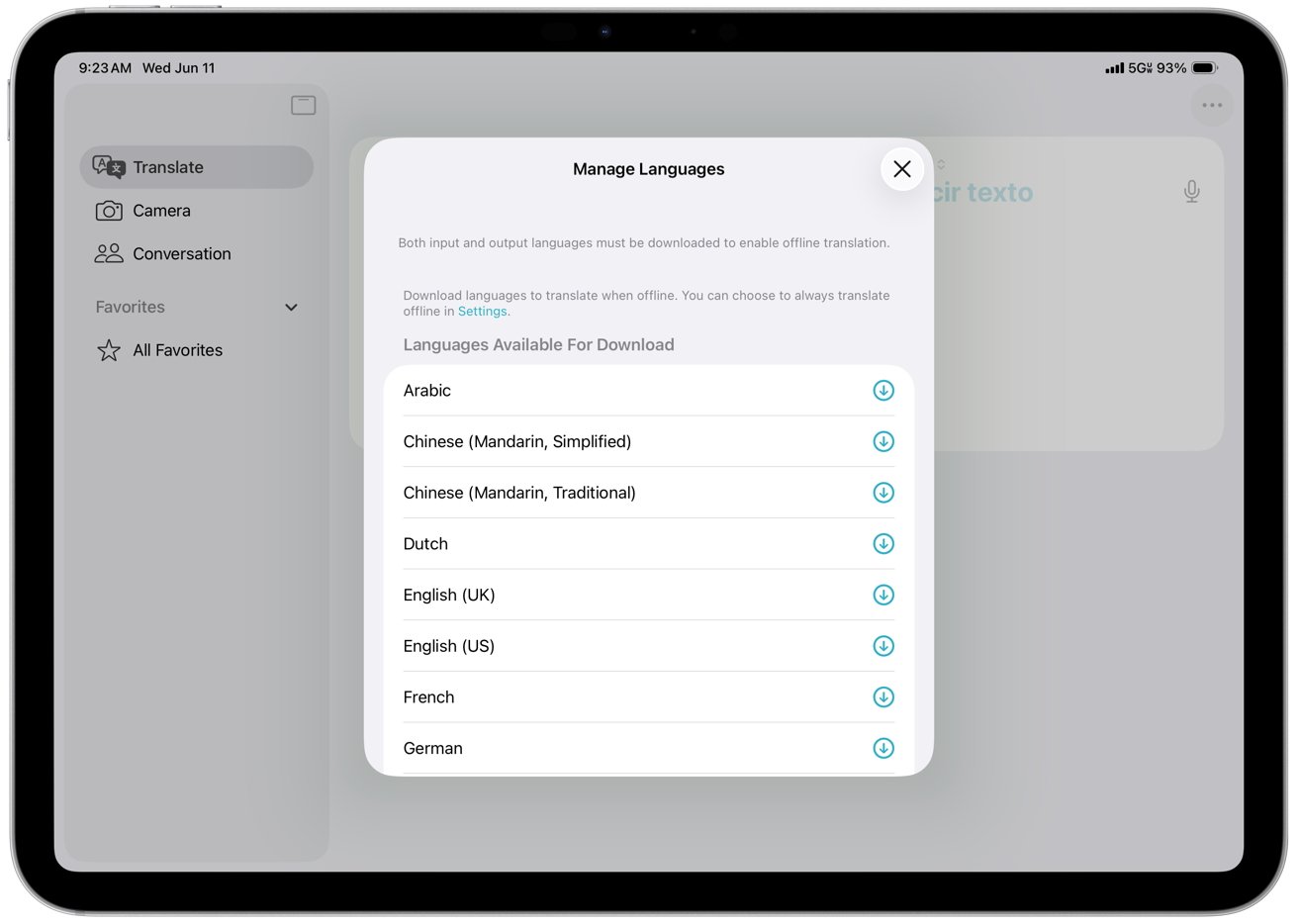

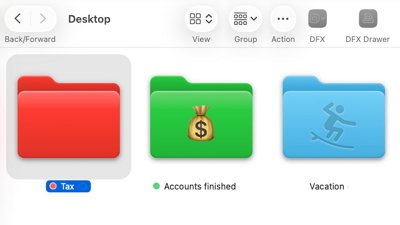

It's not entirely magic, though. You'll first have to download the language models needed for translation. After that, you're good to go.

Talk across languages in real time

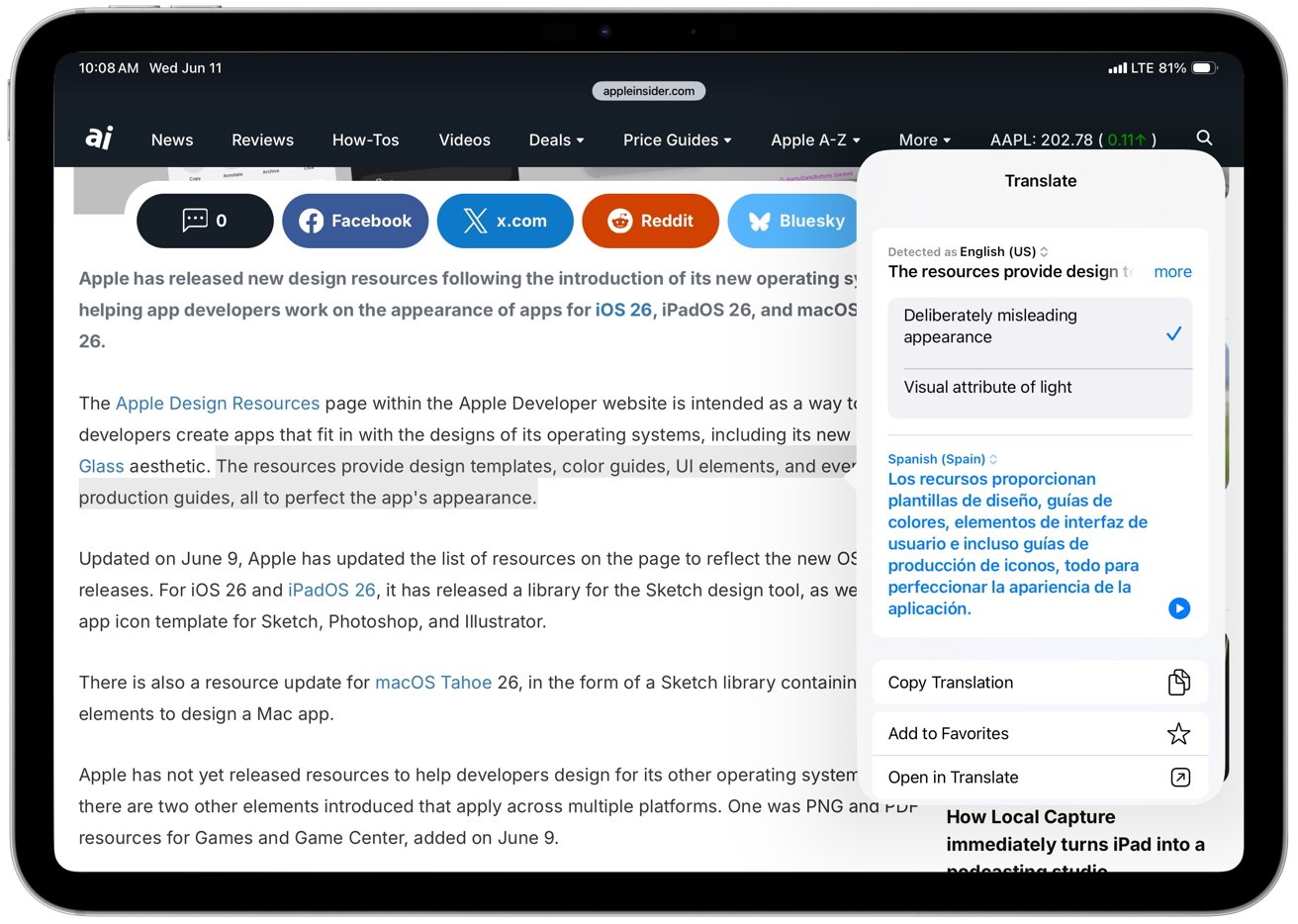

In Messages, live translation lets users type a message in their own language and have it delivered in the recipient's language. In FaceTime, the feature provides live translated captions during video calls. For audio-only calls, it can translate the conversation and speak it aloud.

The translation is handled by Apple's on-device foundation models, meaning the system works offline and doesn't share transcripts or voice data externally.

The technology is integrated into the Phone app, FaceTime, and Messages, making it available for real-time speech and text. Apple has emphasized that this approach prioritizes speed and security, keeping conversations local to the device.

So, there's no need to shout into your phone hoping Siri gets it.

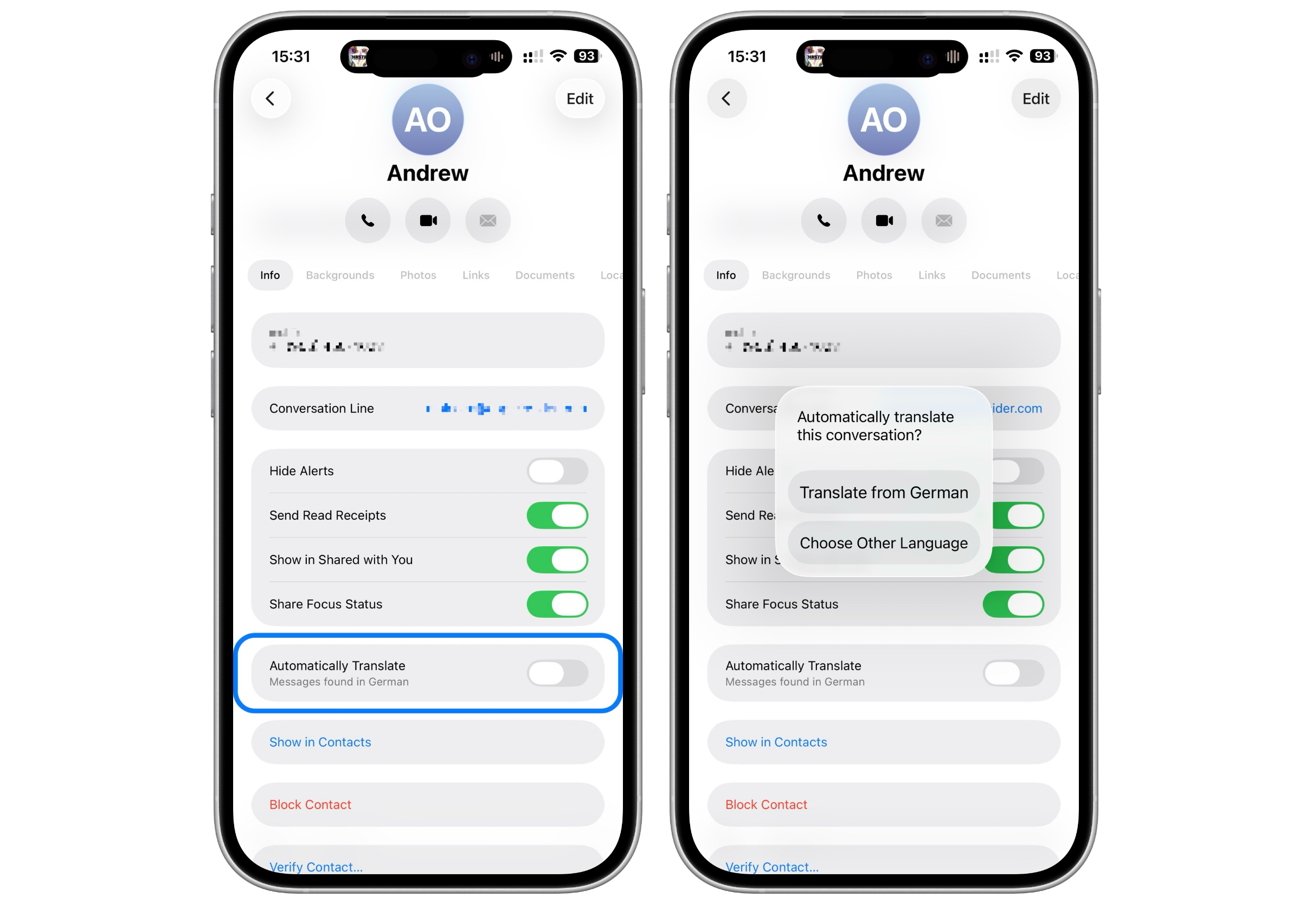

We tested the new live translation feature in iOS 26 to see how it handled real-time messaging. We sent texts in German, and the recipient's iPhone automatically recognized the language.

It didn't work perfectly. Some lines were delayed or didn't translate at all, but that's to be expected, since we're running a beta that is still buggy.

Device and language support

While iOS 26 will be available on iPhone 11 and newer, live translation is restricted to iPhone 15 Pro, iPhone 15 Pro Max, and all iPhone 16 models. Users must have Apple Intelligence enabled, and both the Siri language and the device language must be set to a supported language.

The supported languages vary by app. In Messages, live translation works with English (U.S., UK), French, German, Italian, Japanese, Korean, Portuguese, Spanish, and Chinese (Simplified).

For Phone and FaceTime, support is narrower at launch. It's limited to English (U.S., UK), French, German, Portuguese, and Spanish. Apple says more languages will be added by the end of 2025.

How Apple differs from Google and Samsung

Google and Samsung already offer similar live translation features, but most rely on cloud processing. Google Translate and Live Translate on Android phones support dozens of languages across older and newer models.

Apple is taking a more conservative path by requiring the latest chips and restricting access to certain apps. Where the company hopes to stand out is privacy.

All translation occurs on-device, and the system is deeply integrated into iOS. It offers smoother experiences in Messages and FaceTime than many third-party alternatives. The tradeoff is limited availability for now.

Live translation is a meaningful upgrade for travelers, international families, and business users working across borders. Unlike standalone apps, Apple's solution is embedded in everyday communication tools.

Live translation joins other Apple Intelligence features like smart writing tools, image generation, and personalized summaries.

Connected to Apple's new design era

The release arrives alongside iOS 26's "Liquid Glass" design overhaul, Apple's most significant visual redesign since iOS 7. The interface now uses translucent effects and dynamic motion to give content more depth and focus.

While live translation is primarily a functional feature, it's part of a broader shift in how Apple wants users to see and use their devices: more intuitive, expressive, and intelligent.

Apple's live translation feature brings real-time multilingual communication to native iPhone apps without compromising user privacy. For users with the latest devices, it's one of the most useful applications of Apple Intelligence so far.

Andrew Orr

2 Comments

I have a cruise scheduled for late October that starts in Korea and then spends 12 days around Japan, ending in Tokyo. I know some pleasantries and greetings in Japanese and really look forward to using this. I hope it's released by then, especially the link to AirPods Pro.

Why didn’t Apple simply make a translation tool whereby I can expose my iPhone to discussions around me and have it transcribe the languages I don’t understand?